AWS infrastructure overview

This infrastructure is one way to run your Yocto builds in the cloud. It combines GitLab Runner with AWS autoscaling so that the number of build machines adapts to demand.

You can find the project to build the infrastructure here.

This page describes how the infrastructure works.

To learn how to use the infrastructure, see the usage page.

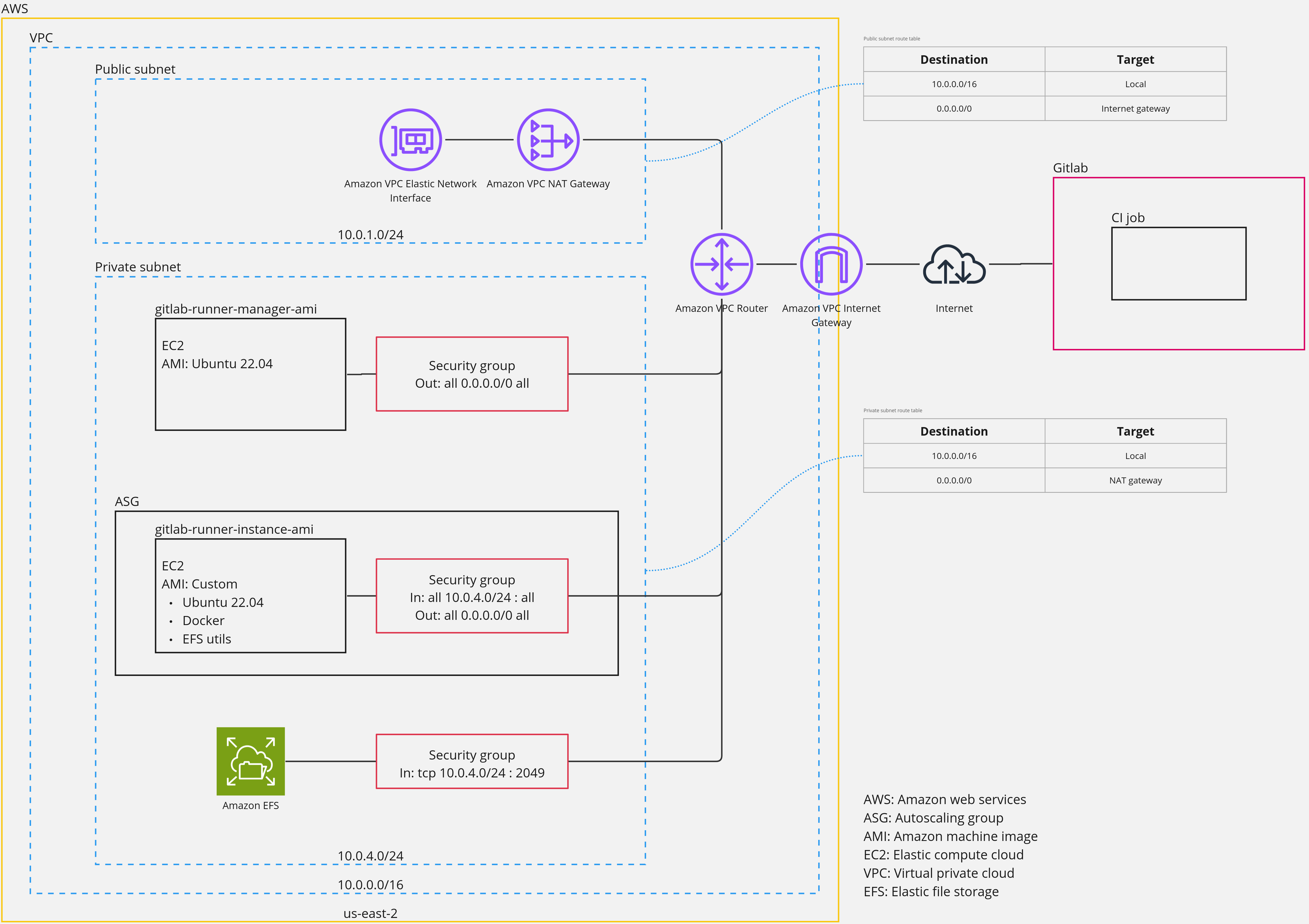

Architecture

Features

The main concept is that a single GitLab runner instance picks up jobs from GitLab. For each job, it asks the autoscaling group to create a build instance, connects to that instance, and runs the job on it. When the job is done, the runner instance asks the autoscaling group to delete the instance it created.
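The job lifecycle above can be sketched as a toy Python model. `FakeAutoscalingGroup`, `run_job`, the instance IDs and the job names are all hypothetical, and no AWS or GitLab API is called; the sketch only shows the ordering: one fresh build instance per job, and teardown even if the job fails.

```python
from dataclasses import dataclass, field
from itertools import count


@dataclass
class FakeAutoscalingGroup:
    """Hypothetical stand-in for the AWS autoscaling group; makes no AWS calls."""
    instances: set = field(default_factory=set)
    _next_id: count = field(default_factory=lambda: count(1))

    def scale_out(self) -> str:
        # The runner asks the group for one more build instance.
        instance_id = f"i-build-{next(self._next_id)}"
        self.instances.add(instance_id)
        return instance_id

    def terminate(self, instance_id: str) -> None:
        # The runner asks the group to remove the instance once the job is over.
        self.instances.discard(instance_id)


def run_job(asg: FakeAutoscalingGroup, job_name: str, log: list) -> None:
    """One job gets one fresh build instance, which is removed afterwards."""
    instance_id = asg.scale_out()
    try:
        # In the real setup the runner connects to the instance and runs the job here.
        log.append(f"{job_name} ran on {instance_id}")
    finally:
        # Teardown happens even if the job fails.
        asg.terminate(instance_id)


asg = FakeAutoscalingGroup()
log = []
for job in ("build-image", "build-sdk"):
    run_job(asg, job, log)

print(log)            # ['build-image ran on i-build-1', 'build-sdk ran on i-build-2']
print(asg.instances)  # set()
```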

GitLab runner instance

The role of this instance is to pick up GitLab jobs and manage the build instances. Our runner uses the Docker Autoscaler executor with the AWS fleeting plugin.

Build instances

These instances are created in the autoscaling group and each one is assigned a job to perform. When the job is completed, the instance is destroyed.

AWS Identity and Access Management (IAM) roles

We have three different roles so that each component gets the permissions it needs.

Runner: The GitLab runner EC2 instance has AWS Systems Manager (SSM) permissions so that we can connect to it for debugging. It also has permission to assume the fleeting plugin role described below.

Fleeting plugin role: This role grants permissions to control the autoscaling group and its EC2 instances. It is assumed by the GitLab fleeting plugin on the runner instance.

Build instances: The role for these instances only has SSM permissions, so that we can connect to them.
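The three roles can be sketched as IAM policy documents built in Python. The account ID, role names and autoscaling group name are placeholders, and the fleeting permissions shown are only core autoscaling and EC2 actions, not necessarily the full set the AWS fleeting plugin requires. `AmazonSSMManagedInstanceCore` is the AWS managed policy commonly attached to instance roles for SSM access; attaching it to the runner and build-instance roles is an assumption about how the project grants SSM permissions.

```python
import json

ACCOUNT_ID = "123456789012"  # placeholder AWS account ID
REGION = "us-east-2"
ASG_NAME = "yocto-build-asg"  # hypothetical autoscaling group name
RUNNER_ROLE_ARN = f"arn:aws:iam::{ACCOUNT_ID}:role/yocto-runner"      # hypothetical
FLEETING_ROLE_ARN = f"arn:aws:iam::{ACCOUNT_ID}:role/yocto-fleeting"  # hypothetical

# Managed policy assumed to be attached to the runner and build-instance roles.
SSM_MANAGED_POLICY_ARN = "arn:aws:iam::aws:policy/AmazonSSMManagedInstanceCore"

# Trust policy for the runner and build-instance roles: EC2 instances may use them.
ec2_trust_policy = {
    "Version": "2012-10-17",
    "Statement": [{
        "Effect": "Allow",
        "Principal": {"Service": "ec2.amazonaws.com"},
        "Action": "sts:AssumeRole",
    }],
}

# Extra permission on the runner role: assume the fleeting plugin role.
runner_assume_policy = {
    "Version": "2012-10-17",
    "Statement": [{
        "Effect": "Allow",
        "Action": "sts:AssumeRole",
        "Resource": FLEETING_ROLE_ARN,
    }],
}

# Trust policy for the fleeting role: only the runner role may assume it.
fleeting_trust_policy = {
    "Version": "2012-10-17",
    "Statement": [{
        "Effect": "Allow",
        "Principal": {"AWS": RUNNER_ROLE_ARN},
        "Action": "sts:AssumeRole",
    }],
}

# Permissions of the fleeting role: resize the group and inspect its instances.
asg_arn = (
    f"arn:aws:autoscaling:{REGION}:{ACCOUNT_ID}:autoScalingGroup:*"
    f":autoScalingGroupName/{ASG_NAME}"
)
fleeting_permissions = {
    "Version": "2012-10-17",
    "Statement": [
        {
            "Effect": "Allow",
            "Action": [
                "autoscaling:SetDesiredCapacity",
                "autoscaling:TerminateInstanceInAutoScalingGroup",
            ],
            "Resource": asg_arn,
        },
        {
            "Effect": "Allow",
            "Action": ["autoscaling:DescribeAutoScalingGroups", "ec2:DescribeInstances"],
            "Resource": "*",
        },
    ],
}

print(json.dumps(fleeting_trust_policy, indent=2))
```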

Networking

We have one VPC with two subnets, a public one and a private one.

| Setting | Value |
|---|---|
| VPC region | us-east-2 |
| VPC IP range | 10.0.0.0/16 |
| Public subnet IP range | 10.0.1.0/24 |
| Private subnet IP range | 10.0.4.0/24 |
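The address plan above can be checked with Python's standard `ipaddress` module. The sketch confirms that both subnets fall inside the VPC range without overlapping, and works out how many addresses each /24 subnet offers once AWS has reserved its five per-subnet addresses (the first four and the last one). Since the runner, the build instances and the EFS storage all take addresses from the private subnet, 251 is an upper bound on how many of them that subnet can hold.

```python
import ipaddress

vpc = ipaddress.ip_network("10.0.0.0/16")
public_subnet = ipaddress.ip_network("10.0.1.0/24")
private_subnet = ipaddress.ip_network("10.0.4.0/24")

# AWS reserves the first four addresses and the last address of every subnet.
AWS_RESERVED_PER_SUBNET = 5


def usable_addresses(subnet: ipaddress.IPv4Network) -> int:
    """Addresses left for instances and other interfaces in an AWS subnet."""
    return subnet.num_addresses - AWS_RESERVED_PER_SUBNET


# Both subnets must lie inside the VPC and must not overlap each other.
assert public_subnet.subnet_of(vpc) and private_subnet.subnet_of(vpc)
assert not public_subnet.overlaps(private_subnet)

for name, subnet in (("public", public_subnet), ("private", private_subnet)):
    print(f"{name}: {subnet} -> {usable_addresses(subnet)} usable addresses")
# public: 10.0.1.0/24 -> 251 usable addresses
# private: 10.0.4.0/24 -> 251 usable addresses
```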

The runner instance, the build instances and the EFS storage are located in the private subnet, so none of them can be reached directly from the internet. Outgoing traffic from the private subnet goes through the NAT gateway, located in the public subnet, and then through the internet gateway to reach the internet.

Public subnet routes: Traffic within the VPC stays local; all other outgoing traffic from this subnet goes through the internet gateway.

| Destination | Target |
|---|---|
| 10.0.0.0/16 | Local |
| 0.0.0.0/0 | Internet Gateway |

Private subnet routes: Traffic within the VPC stays local; all other outgoing traffic from this subnet goes through the NAT gateway.

| Destination | Target |
|---|---|
| 10.0.0.0/16 | Local |
| 0.0.0.0/0 | NAT Gateway |
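VPC route tables send traffic to the most specific route that contains its destination (longest prefix match). The sketch below models the two route tables above with Python's `ipaddress` module; the target names and the example destination `203.0.113.10`, an address from a documentation-only range, are placeholders.

```python
import ipaddress

# Route tables from this page, as (destination CIDR, target) pairs.
PUBLIC_ROUTES = [("10.0.0.0/16", "local"), ("0.0.0.0/0", "internet-gateway")]
PRIVATE_ROUTES = [("10.0.0.0/16", "local"), ("0.0.0.0/0", "nat-gateway")]


def next_hop(routes: list[tuple[str, str]], destination: str) -> str:
    """Return the target of the longest-prefix route containing destination."""
    address = ipaddress.ip_address(destination)
    matches = [
        (ipaddress.ip_network(cidr), target)
        for cidr, target in routes
        if address in ipaddress.ip_network(cidr)
    ]
    _, target = max(matches, key=lambda match: match[0].prefixlen)
    return target


# A build instance reaching another address inside the VPC stays local.
print(next_hop(PRIVATE_ROUTES, "10.0.4.25"))     # local
# A build instance fetching sources from the internet leaves through the NAT gateway...
print(next_hop(PRIVATE_ROUTES, "203.0.113.10"))  # nat-gateway
# ...and the NAT gateway, in the public subnet, forwards to the internet gateway.
print(next_hop(PUBLIC_ROUTES, "203.0.113.10"))   # internet-gateway
```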

Security

We have three security groups: one for the GitLab runner instance, one for the build instances and one for the EFS.

GitLab runner: No inbound traffic is allowed. All outbound traffic is allowed and goes through the NAT gateway.

| Direction | Protocol | Port | Source |
|---|---|---|---|
| Out | All | All | 0.0.0.0/0 |

Build instances: Inbound traffic is allowed only from the private subnet, where the runner instance that connects to them is located. All outbound traffic is allowed and goes through the NAT gateway.

| Direction | Protocol | Port | Source |
|---|---|---|---|
| In | All | All | 10.0.4.0/24 |
| Out | All | All | 0.0.0.0/0 |

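EFS: Inbound traffic is allowed only from the private subnet (the build instances) and only on TCP port 2049 (NFS), which the build instances use to read from and write to the drive. No outbound traffic is allowed; because security groups are stateful, responses to allowed inbound connections still get through.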

| Direction | Protocol | Port | Source |
|---|---|---|---|
| In | TCP | 2049 | 10.0.4.0/24 |
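The inbound rules above behave as allow-lists: a security group admits a new inbound connection only if at least one rule matches its protocol, port and source, and rejects everything else. The `Rule` class and `allows_inbound` function below are a hypothetical sketch, not an AWS API, and model only new inbound connections; since security groups are stateful, replies to allowed traffic are not checked against the rules. The source addresses in the examples are made up.

```python
import ipaddress
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Rule:
    protocol: str          # "tcp", "udp" or "all"
    port: Optional[int]    # None stands for "all ports"
    source: str            # allowed source CIDR block


def allows_inbound(rules: list[Rule], protocol: str, port: int, source_ip: str) -> bool:
    """A new inbound connection passes only if some rule matches it."""
    ip = ipaddress.ip_address(source_ip)
    for rule in rules:
        protocol_ok = rule.protocol in ("all", protocol)
        port_ok = rule.port is None or rule.port == port
        if protocol_ok and port_ok and ip in ipaddress.ip_network(rule.source):
            return True
    return False  # default deny


# Inbound rules from the tables on this page.
RUNNER_INBOUND: list[Rule] = []  # no inbound rules at all
BUILD_INBOUND = [Rule("all", None, "10.0.4.0/24")]
EFS_INBOUND = [Rule("tcp", 2049, "10.0.4.0/24")]

print(allows_inbound(EFS_INBOUND, "tcp", 2049, "10.0.4.37"))   # True: NFS from a build instance
print(allows_inbound(EFS_INBOUND, "tcp", 22, "10.0.4.37"))     # False: wrong port
print(allows_inbound(BUILD_INBOUND, "tcp", 22, "10.0.4.10"))   # True: from the private subnet
print(allows_inbound(BUILD_INBOUND, "tcp", 22, "10.0.1.15"))   # False: from the public subnet
print(allows_inbound(RUNNER_INBOUND, "tcp", 22, "10.0.4.37"))  # False: nothing allowed in
```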

Storage (Cache)

The EFS is mounted on the build instances at boot and serves as cache storage, which allows incremental Yocto builds. Its restrictive file system policy only allows the build instances to mount the drive and write to it.
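A minimal sketch of the cache storage wiring, assuming the build instances mount the file system with the Amazon EFS mount helper (`amazon-efs-utils`) using TLS and IAM authorization, so that the file system policy can identify the build-instance role. The file system ID, account ID, role name and mount point are placeholders, and pointing Yocto's `SSTATE_DIR` and `DL_DIR` at the share is one common way to reuse build output, not necessarily what the project does.

```python
import json

ACCOUNT_ID = "123456789012"                 # placeholder AWS account ID
REGION = "us-east-2"
FS_ID = "fs-0123456789abcdef0"              # placeholder EFS file system ID
MOUNT_POINT = "/mnt/yocto-cache"            # hypothetical mount point
BUILD_ROLE_ARN = f"arn:aws:iam::{ACCOUNT_ID}:role/yocto-build"  # hypothetical


def fstab_line(fs_id: str, mount_point: str) -> str:
    """/etc/fstab entry that mounts EFS at boot through the EFS mount helper."""
    # _netdev waits for networking; tls encrypts traffic, iam sends the instance role.
    return f"{fs_id}:/ {mount_point} efs _netdev,noresvport,tls,iam 0 0"


def efs_file_system_policy(fs_id: str, build_role_arn: str) -> dict:
    """File system policy: only the build-instance role may mount and write."""
    fs_arn = f"arn:aws:elasticfilesystem:{REGION}:{ACCOUNT_ID}:file-system/{fs_id}"
    return {
        "Version": "2012-10-17",
        "Statement": [{
            "Effect": "Allow",
            "Principal": {"AWS": build_role_arn},
            "Action": [
                "elasticfilesystem:ClientMount",
                "elasticfilesystem:ClientWrite",
            ],
            "Resource": fs_arn,
        }],
    }


def yocto_cache_conf(mount_point: str) -> str:
    """local.conf lines that keep the shared-state cache and downloads on EFS."""
    return (
        f'SSTATE_DIR = "{mount_point}/sstate-cache"\n'
        f'DL_DIR = "{mount_point}/downloads"\n'
    )


print(fstab_line(FS_ID, MOUNT_POINT))
print(json.dumps(efs_file_system_policy(FS_ID, BUILD_ROLE_ARN), indent=2))
print(yocto_cache_conf(MOUNT_POINT), end="")
```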